Counting the costs and benefits in transition to a digital world

Research 25 Jan 2017

Juliette Mendelovits and Ling Tan compare the results of paper and online tests.

We live in a digital age – a truism that applies to much of our day-to-day lives. Education is increasingly moving from paper and print to screen and keyboard. The trend is inevitable and in large part welcome, but as educators we need to understand the impact the digital world is having – both positive and negative – on student learning.

One area in which the change from print to digital is being studied closely is large-scale learning assessments, especially those that attempt to track progress over time. The biggest international survey, the Programme for International Student Assessment (PISA), has grasped the nettle and moved almost entirely from paper-based to online assessment over the last decade. The Progress in International Reading Literacy Study (PIRLS) is experimenting in a similar direction. Since a key purpose of these assessments is to measure trends over time, it is essential to understand how changing from paper-based to digital delivery affects the trend lines. ‘If you want to measure change, don’t change the measure’ is a byword of longitudinal assessment – but if you must change the measure, you need to investigate the effect of the change.

Then you are at least able to make an informed judgement as to whether any changes observed in the results are based on actual changes in achievement (for example, maths proficiency), or have more to do with changes in the mode of testing (in this context, a change from print to digital).

The International Schools’ Assessment (ISA) is also faced with this challenge. In response to requests from many international school users, ISA has introduced the option of either paper-based or online testing from 2016 onwards. Ideally, the results would be directly comparable, regardless of the mode of delivery, allowing schools to continue to monitor progress of individual students, classes and whole schools year on year, whether they choose paper or digital as the mode of delivery. But it would be foolish to assume that this ideal is the actuality.

To test the actuality, therefore, the ISA conducted a ‘mode study’ in 2014-15, comparing how students performed on the same tasks in paper and online delivery modes. The results not only have a bearing on the feasibility of continuously monitoring student achievement, but also throw some interesting light on the way students learn and provide some indications about how different modes of presentation could have an effect on teaching processes.

ISA offers assessments of reading, mathematics, writing and science. The first three of these were paper-based only from 2001 to 2015. A separate mode study on writing performance is planned for the future. Science was introduced in 2015 as an online-only assessment, so a mode study in science was not relevant in the context of ISA. We focused for the purposes of this study on reading and mathematics in Grades 3, 5, 7 and 9. The students were taking part in the 2015 ISA Trial Test, which is conducted annually on a voluntary basis by participating schools in order to try out the quality of tasks that may be included in the real tests. These students went on to take the main February 2015 assessments a week or so later. In trial test schools that agreed to take part in the mode study, students were randomly assigned to two groups: one group was given a paper-based test, while the other was given an online test comprising the same tasks. The performance of each group on the tasks was analysed, taking into account their baseline proficiency (judged by their performance on the ISA in previous years), as well as background variables such as gender and mother tongue.
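The random assignment step described above can be sketched in a few lines of Python. This is only an illustration of the design, not the procedure the study team actually used: the function name `assign_modes`, the student identifiers and the seed are all hypothetical.

```python
import random


def assign_modes(student_ids, seed=0):
    """Randomly split students into 'paper' and 'online' groups.

    Hypothetical helper: group sizes differ by at most one, and the
    fixed seed makes the allocation reproducible.
    """
    rng = random.Random(seed)
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "paper": sorted(shuffled[:half]),
        "online": sorted(shuffled[half:]),
    }


# Invented identifiers for an eleven-student class.
groups = assign_modes(range(1, 12), seed=7)
print(groups["paper"])
print(groups["online"])
```

Because chance alone decides who sits which version, differences in prior attainment, gender or mother tongue should balance out across the two groups on average, and the baseline adjustment then mops up whatever imbalance remains.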

So – what was the outcome?

First, the big picture. For reading, results were broadly comparable in the two modes at all four of the selected grade levels. For mathematics, the same was true for Grades 3, 5 and 9, but in Grade 7, students who took the paper-based assessment did significantly better than those who took the online assessment. What might explain this?

Closer examination of performance across the sequence of the Grade 7 maths test showed that the paper-based and digital groups behaved similarly on most of the test. It was only on the last five tasks – the hardest on the test – that the performance of the digital group declined. This did not appear to be through lack of motivation to complete the tasks: in fact, about the same numbers attempted the tasks in the two modes, right to the end.

One explanation for higher achievement on more difficult tasks when working on paper is that, when solving problems in mathematics, it can be helpful to draw on diagrams and graphs and to make notes – a strategy easy to use when working on paper, but not so easy on a screen. Providing paper for students to work on when they’re operating in an online environment is likely to help, but is not the total solution. Tasks that include complex graphs and tables cannot readily be reproduced by students on their working paper, so cannot be annotated in the way they can in paper tests. And transposing working from paper to screen creates an additional opportunity for error.

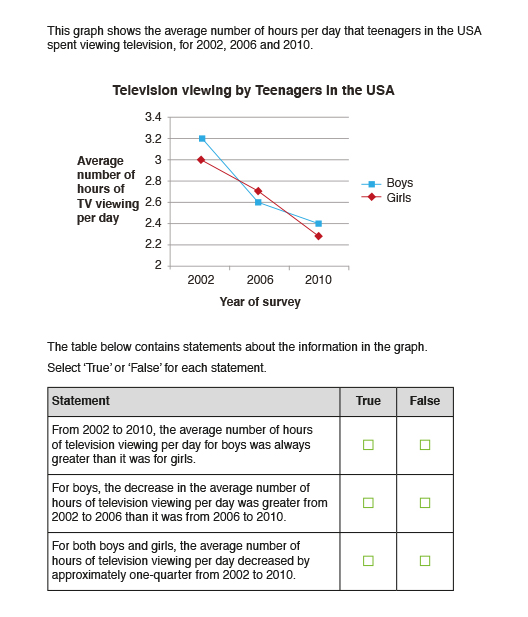

One of the tasks that showed the biggest difference in performance asked students to interpret a graph about television viewing, in the form of evaluating a number of statements as true or false (see below).

This difficult task was answered successfully by almost half of the Grade 7 students who saw it on paper (as shown in Figure 1), but by only just over a quarter of those who saw it on screen. The difference could be due to two factors: first, the inability of the digital group to annotate the graph; and second, the onscreen reading challenge presented by each of the three rather wordy parts of the question.

There’s a lesson here, not only for designers of tests, but also for anyone who wants to convey information, about how dramatically mode can affect our ability to read and deal with complexity. From the narrower perspective of assessment design, the results showed that this kind of task cannot be treated as equivalent in paper and online versions.
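One simple way to check whether a gap like this is bigger than chance would produce is a two-proportion z-test. The sketch below is an assumption about how such a check might look, not the analysis reported in the mode study, which also adjusted for baseline proficiency and background variables. The counts in the example call are invented purely to mirror ‘almost half’ and ‘just over a quarter’; the article does not report group sizes.

```python
import math


def two_proportion_z_test(correct_a, n_a, correct_b, n_b):
    """Return (z, two-sided p-value) for a difference in success rates.

    Uses the pooled-proportion standard error, which is the usual choice
    when the null hypothesis is that both groups share one success rate.
    """
    p_a = correct_a / n_a
    p_b = correct_b / n_b
    pooled = (correct_a + correct_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 48 of 100 correct on paper, 27 of 100 on screen.
z, p_value = two_proportion_z_test(48, 100, 27, 100)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value would suggest that the two versions of the task are not behaving as the same task, which is the practical question for test designers.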

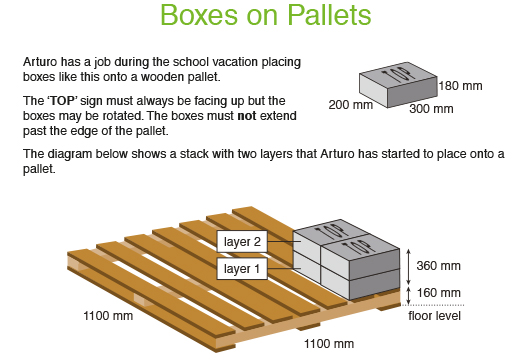

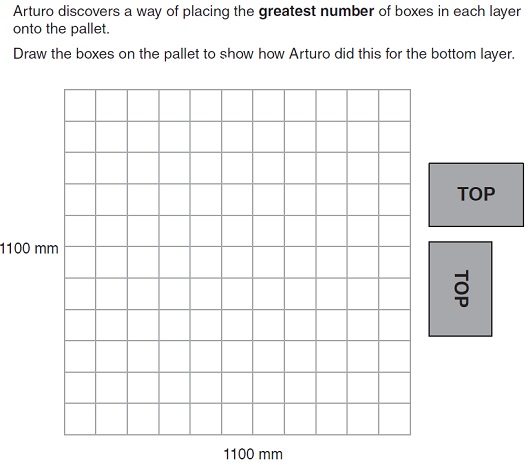

One more example from this study shows that the evidence was not all in one direction: in some cases the digital version was easier than the paper-based one. Another task on the Grade 7 maths test asked students to fit the maximum number of boxes of a specified dimension onto a pallet (see below).

In the paper-based version (shown above), students were asked to draw the boxes onto the pallet, represented by a grid. In the online version, the students had to drag and drop the rectangular box shape (in two rotations) onto the grid. The online version was much easier, presumably because drag-and-drop allowed quick evaluation of alternative possibilities, whereas in the paper-based version students had to visualise and draw the shapes, sometimes erasing before a solution was found. In trial tests for other grades, similar spatial reasoning tasks with a drag-and-drop version in the digital mode were also easier than their paper-based versions.

The conclusions we have reached from this small research study can be summarised as follows.

In terms of learning strategies, we can infer that, where maths problems are complex, students are likely to cope better if they can sketch and annotate freely. On the other hand, where spatial reasoning is involved, the ability to manipulate and experiment with shapes – made possible in the digital environment – aids problem solving.

In terms of test construction, the ISA tests and the selection of tasks will be informed by what we have learned about the specific effects of mode and format on students’ performance, and the strategies they use to respond to tasks. We have evidence that not all tasks are of equal difficulty in different formats. Where tasks behave significantly differently in the two modes, they are treated for measurement purposes as two different tasks, so that students are not disadvantaged.
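The idea of treating a mode-sensitive task as two different tasks can be pictured with a small sketch. Everything here is hypothetical: the task identifiers, the percentages and the 10-point threshold are invented, and a simple gap in percent correct stands in for the statistical test of significance that a real calibration would use.

```python
def split_mode_sensitive_tasks(percent_correct, threshold=10.0):
    """List the units to calibrate, splitting tasks that differ by mode.

    percent_correct maps a task id to {"paper": pct, "online": pct}.
    A task whose paper/online gap reaches the (assumed) threshold is
    entered twice, once per mode; other tasks are entered once.
    """
    units = []
    for task_id, by_mode in sorted(percent_correct.items()):
        gap = abs(by_mode["paper"] - by_mode["online"])
        if gap >= threshold:
            units.append(f"{task_id}:paper")
            units.append(f"{task_id}:online")
        else:
            units.append(task_id)
    return units


# Invented figures for two tasks.
example = {
    "M07_graph": {"paper": 48.0, "online": 27.0},
    "M07_count": {"paper": 71.0, "online": 69.0},
}
print(split_mode_sensitive_tasks(example))
```

Splitting a task in this way means each version gets its own difficulty estimate, so a student is not penalised for having met the harder version.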

In general, we can reassure schools that results on the digitally delivered ISA tests in reading and mathematics are comparable with those from the paper-based tests, and reporting on trends can proceed.

Find out more:

For information about the ISA, contact isa@acer.edu.au

For a copy of the mode study report, contact Ling.Tan@acer.edu.au

This article first appeared in International School magazine, Vol 19(1), 2016.